Non-Unital Noise Adds a New Wrinkle to the Quantum Supremacy Debate

Written By Claire Fu

Just thirty years ago, quantum computing was nothing more than an idea. Since then, increasing research has been conducted to bring us closer to a reality where quantum computers exist alongside classical computers, or even replace them. Research has shown that upon being assigned random problem solving tasks, a quantum computer seemingly performs significantly better than a classical computer when executing the code.

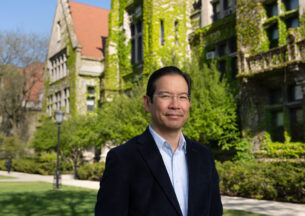

“Essentially, the only thing that current large scale near-term quantum experiments have done even today, is what we call random circuit sampling, which amounts to picking random pieces of code and running them,” Assistant Professor Bill Fefferman at the University of Chicago Department of Computer Science states. “Even though this is not known to be particularly useful, the problem that it’s solving by just choosing random lines of code quantumly actually does give a significant computational advantage that a classical computer, like the computer on your desk, would not be able to solve.”

The idea that current quantum experiments can solve certain specific tasks faster than any classical computer — coined “quantum supremacy” — was first demonstrated by Google in 2019, when they claimed that a quantum computer could perform a series of operations in 200 seconds where a classical supercomputer would take 10,000 years. However, there is a caveat which the theory behind quantum supremacy hadn’t considered in detail before the experiment actually happened: the effects of error induced by imperfections in the experiment, for instance the use of noisy gates.

“Google’s output signal, albeit still non-trivial, was drowned in a sea of noise,” said fourth-year graduate student Soumik Ghosh. “For their largest circuits, they only got a signal fidelity of 0.1%. Because noise played such a crucial role in their experiments, a series of works since then have focused on quantifying the difficulty of simulating their experiment, by taking into account the noise present in their hardware.”

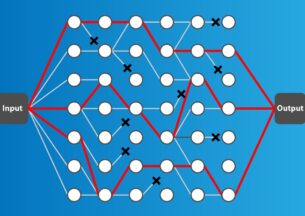

This raises an important new question for the theory of quantum supremacy: what is the most accurate way to mathematically model realistic quantum noise? This itself is a very difficult question that is not yet well understood in large scale quantum experiments. A first attempt is to reductively model noise using the so-called ‘depolarizing’ noise channel, which only increases the entropy of the system. In this case, a recent paper suggested that quantum computing does not possess a scalable advantage over classical computing in random circuit sampling.

However, what complicates the story is that in addition to depolarizing noise, there is another type of noise in real hardware, known as non-unital noise, that had not been well studied in the literature. This noise behaves very differently from depolarizing noise and can potentially decrease the entropy of the system. Then the question becomes, what happens to random circuit sampling under the most realistic hardware conditions, featuring both depolarizing and non-unital noise? In Fefferman’s lab, Ghosh recently posted a paper titled Effect of non-unital noise on random circuit sampling, together with collaborators at the University of Maryland, the University of Waterloo, and IBM Research, which sought to answer this exact question. The paper was a contributed talk at the Quantum Information Processing Annual Meeting in 2024, the most prestigious theory of quantum computing conference in the world.

All previous theoretical results about random quantum circuit experiments made use of anticoncentration — a ‘flatness property’ that means that the output distribution sampled by the experiment is not supported or ‘concentrated’ on a sufficiently small number of outcomes. Anticoncentration has long been believed to be a key ingredient in modeling random circuit sampling, having been first considered by Aaronson and Arkhipov in 2010, several years before the first experimental claims of quantum supremacy. However, by considering the effects of depolarizing noise together with non-unital noise, Ghosh and Fefferman found that, in fact, anticoncentration does not occur.

“We show that if we factor in the effects of realistic noise in quantum hardware, then this task of random circuit sampling is no longer provably hard anymore,” Ghosh states. “All known proofs for quantum supremacy break down. However, at the same time, all known proofs for classical computers demonstrating a similar ability also break down as well. Fundamentally, if we try to faithfully model the noise, then we need a fairly new set of techniques to argue about hardness or lack thereof for random circuits. Existing techniques do not tell us much.”

What does this mean for quantum supremacy? Ghosh and Fefferman do not claim to debunk or support quantum supremacy in either direction. Instead, they are taking this as an opportunity to acknowledge how little we still know and understand about quantum computing. While they seek to study noise in order to provide further evidence that contributes to the quantum supremacy debate, their current results show that all of the algorithms and methods that have been used thus far to study this topic have been fundamentally inaccurate. Through this paper, Ghosh and Fefferman demonstrate that arguments in the presence of simplistic models of noise, in favor of classical stimulability (such as in this paper), do not generalize to realistic noise that is seen in hardware.

“I see this a lot, but in the media people either say that quantum supremacy is completely disproven, or otherwise, that the current generation of quantum supremacy experiments are incredibly hard to simulate classically,” Fefferman emphasizes. “We’re not making either one of these claims. In this paper we’ve established a barrier that makes it difficult for us to understand how hard it is for classical computers to solve random circuit sampling problems because the output distributions of noisy quantum experiments don’t anticoncentrate. This means that when we consider more realistic noise models, we’re going to need new ways of arguing about the system. We are not saying these experiments are easy to simulate classically, and we are not saying these experiments are solving hard problems. We’re saying that we don’t know. It’s a formal statement of our ignorance.”

Moving forward, Ghosh hopes to pave the way for developing new techniques in order to model random quantum circuits with algorithms that incorporate the non-unital noise. Fefferman continues to study the roots of the power of quantum computing, in hopes of understanding what distinguishes quantum from classical computation. To learn more about their research, please visit the UChicago Theory Group’s website here.