Redirecting Hands in Virtual Reality With Galvanic Vestibular Stimulation: UChicago Lab to Present First-of-Its-Kind Work at UIST 2025

Imagine stepping into a flight simulator and, instead of only seeing or hearing cues that your plane is banking left, you actually feel it: your body tilts as if you’re turning, guided not by physical motion but by gentle electrical signals sent to your inner ear. This is the promise of Galvanic Vestibular Stimulation (GVS), a technology already explored for enhancing motion cues in pilot training and rehabilitation. New research shows that GVS can be taken a step further, to help people relearn balance after injury or neurological conditions by tapping directly into the brain’s sense of orientation and movement.

Now, for the first time, researchers at the University of Chicago Department of Computer Science’s Human Computer Integration (HCI) Lab have applied GVS to virtual reality hand movement. Their paper, “Vestibular Stimulation Enhances Hand Redirection,” was recently presented at the 2025 ACM Symposium on User Interface Software and Technology (UIST), a globally recognized conference for advances in interface research. The first author, Kensuke Katori, is a student in the UChicago CS Student Summer Research Fellowship Program who joined the HCI Lab under the mentorship of PhD student Yudai Tanaka and Associate Professor Pedro Lopes.

“Yudai and I explored GVS for a number of years, sometimes mildly successful and some not,” said Lopes. “Behind all our ideas was that we explored GVS for what it does well (i.e., balance)—however, Ken (Katori) took GVS into a new realm by showing it can solve other important problems in VR, such as the popular case of hand redirection!”

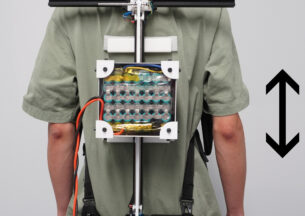

Traditionally, hand redirection in virtual reality has depended primarily on altering a user’s visual field. In this paper, Katori and his co-authors present a technique that uses GVS to subtly redirect hand movements in virtual reality. They show that even imperceptible electrical stimulation of the vestibular system, which is responsible for balance and spatial orientation, can alter users’ perception of motion and thereby influence hand trajectories. They achieve this entirely without physical force or visual effects, giving VR designers a new tool that works through the body’s natural sense of balance. This has the potential to reimagine how people interact with immersive digital worlds in training, rehabilitation, gaming, and more.

“It was thrilling to see our initial insight about body balance play out in the real world,” Katori said. “When a friend tried our first prototype, they clearly had a harder time detecting the redirection, which confirmed we were onto something important. Trying it myself, the effect of the stimulation was more pronounced than I ever expected; it was a powerful reminder of how surprisingly malleable our senses can be.”

Katori was able to showcase his work to a larger audience at this year’s UIST conference.

“For our talk at UIST 2025, I focused on conveying both the elegance of this method and its potential for future development. At my advisor Pedro’s suggestion, we incorporated a live demo of the vestibular stimulation, which really captivated the audience. The best part was after the talk; people came up to us to brainstorm new use cases and share in the excitement. It created a real sense of shared discovery.”

Potential applications for this technique include VR training systems, where novices can learn correct techniques and gestures through imperceptible feedback, and rehabilitation contexts, where therapists can encourage patients to perform specific motor exercises more effectively. The underlying technology’s ability to influence physical motion in virtual spaces also hints at new immersive entertainment experiences and deeper research possibilities in embodied interaction.